This time it’s Salesforce via Gainsight

Once again, the Salesforce Critical Incident Center has activated and issued a Security Advisory regarding “unusual activity involving Gainsight-published applications.” Apparently, attackers compromised Gainsight and obtained OAuth tokens for each of Gainsight’s clients.

And, once again, the threat actors claiming responsibility are Scattered Lapsus$ ShinyHunters (SLSH).

This story, however, starts long before Salesforce’s advisory. Earlier this year, attacks targeting Salesforce surged. They were attributed to allegedly “different” threat actors, all of them conducting sophisticated attacks.

Using all sorts of techniques, from vishing to compromising the supply chain, these threat actors found paths to obtain their treasure: full access to the client’s Salesforce environment. From there, they hit the jackpot.

One notorious incident, which began in March and continued through June 2025, is the Salesloft Drift application breach. We won’t get into much detail here, since it’s already covered extensively here and here, but SLSH claims they breached Salesloft, and because many customers had Drift integrated into their environments, this led to full compromise of these clients via the Drift app.

Understanding the Gainsight Incident

While the initial public disclosure occurred on November 19, 2025, this breach unfolded across three months, starting in August 2025. After the Salesloft Drift incident, many organizations sought the assistance of security professionals, consultants and experts to provide them with the appropriate response. Those responses varied, reflecting differences in available visibility and investigation scope. As you may know, many organizations do not retain data for long periods, including SaaS data, due to storage and cost considerations. Collecting and analyzing long-term SaaS telemetry remains a challenge across industries.

This type of supply chain intrusion reflects the sophistication of the threat actor, not a failure of any single organization. These attacks often exploit the same structural challenges most companies face today: limited SaaS log retention, gaps in cross-application visibility, and the difficulty of correlating activity across identity, API, and managed package layers. Environments with these constraints are not doing anything wrong. They are operating within the practical limits of modern SaaS ecosystems, which advanced actors like SLSH specifically set out to exploit.

Without jumping to any conclusions, Gainsight was targeted in the attackers’ Stage 2, which resulted in the extraction of credentials. The available evidence indicates that the attackers continued their activity over time, leading to Stage 3: issuing refresh tokens for up to 285 Salesforce instances linked to Gainsight, which provided API-level access without the need for MFA (duh, these are refresh tokens).

Salesforce claims to have detected this breach through the anomalous API call patterns emerging from this integration, specifically queries originating from non-whitelisted IP addresses, including Tor exit nodes (a method consistent with SLSH’s broader operational patterns).
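To make the "non-whitelisted IP" signal concrete, here is a minimal sketch of an allowlist check for an integration's API traffic. It is not how Salesforce implements its detection. The allowlisted networks are documentation-range placeholders, and the `SRC_IP` field name is borrowed from the pseudocode later in this post.

```python
import ipaddress

# Hypothetical allowlist: networks the integration is expected to call from.
# These are documentation ranges, not real Gainsight or Salesforce addresses.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_allowlisted(ip: str) -> bool:
    """Return True if the address falls inside any allowlisted network."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        # Unparseable source addresses are treated as not allowlisted.
        return False
    return any(addr in net for net in ALLOWED_NETWORKS)


def flag_unexpected_sources(events):
    """Return the API events whose source IP is outside the allowlist."""
    return [e for e in events if not is_allowlisted(e.get("SRC_IP", ""))]
```

Feeding this a mix of expected integration traffic and a call from 3.239.45.43 (the IOC listed in the timeline) would return only the latter.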


Salesforce’s response to the Gainsight-related activity

Upon detection, Salesforce immediately disconnected Gainsight-published applications, with a security advisory announcement sent to most of the relevant parties. By that point, the attackers had already used the OAuth tokens that were issued through the integration.

What we know so far about the Gainsight-related Salesforce activity

ShinyHunters claim that they have had access to Gainsight infrastructure using the stolen tokens from the Salesloft breach since August 2025.

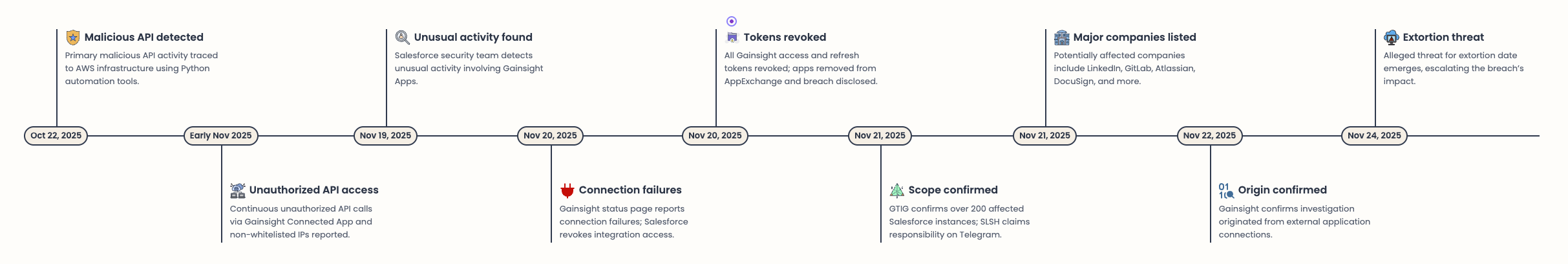

October 22-23, 2025

Primary malicious API activity is detected from IP address 3[.]239[.]45[.]43 with the user agent Python/3.11 aiohttp/3.13.1, indicating the use of automation tooling as well as AWS infrastructure.

Early November 2025

Reports claim continuous unauthorized API access using Gainsight Connected App as well as API calls coming from non-whitelisted IP addresses (a pattern consistent with earlier activity).

November 19, 2025

The Salesforce security team detects “unusual” activity involving Gainsight Apps.

November 20, 2025 - 03:35 UTC

The Gainsight status page reports connection failures, and behind the scenes, Salesforce begins revoking integration access.

Throughout the day, all active access and refresh tokens associated with Gainsight are revoked, and Gainsight apps are “temporarily” removed from Salesforce AppExchange. The initial public disclosure of the breach is issued, followed by an official security advisory.

November 21, 2025

GTIG confirms “more than 200 potentially affected Salesforce instances.” By this time, SLSH (Scattered Lapsus$ ShinyHunters) claims responsibility in their Telegram channel.

This is followed by a list of major companies that are potentially affected:

- Malwarebytes

- GitLab

- Atlassian

- F5

- DocuSign

- SonicWall

- Palo Alto Networks (including reported threats to Unit 42)

Plot twist: CrowdStrike fired an employee with ties to SLSH for allegedly selling access to their systems.

November 22, 2025

Gainsight confirms the investigation “originated from applications' external connection.”

November 24, 2025

The date the attackers allegedly threatened as their extortion deadline.

Potential impact on Salesforce Customers

While there’s currently no estimate or confirmation of the full impact this attack may have caused, our team studied the application, and these are the likely outcomes:

Full Access to Sensitive Salesforce Objects

Gainsight is commonly granted broad permissions on sensitive Salesforce objects, such as Accounts, Contacts, Cases, Opportunities and more, sometimes with full read/edit capabilities. A successful operation could therefore allow:

- Full exfiltration of sensitive data

- Manipulation or masking of data, making it unusable

Exposure of Gainsight customer data

Attackers could also access Gainsight-specific objects containing customer data, such as:

- Timeline notes

- CTAs

- Health scorecards

- Tasks

This means customers of affected instances could have had their data exposed.

Execution of Gainsight Apex Classes

Mitiga Labs has learned that some Gainsight permission sets allow the execution of Gainsight Apex classes. This means that an attacker who takes over such a user can potentially run code indirectly.

API enablement for fast extraction

Most Gainsight service users have the API enabled, which allows attackers to extract data efficiently, rapidly, and at high volumes.

The IOCs support this: the Python user agent is consistent with automated activity.
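One way to watch for this kind of fast extraction is to total the rows each user pulls through the API per hour and flag outliers. The sketch below assumes events have already been parsed into dictionaries with a `USER_ID`, a `ROWS_PROCESSED` count, and a `timestamp` as a `datetime`. Those field names and the 50,000-row threshold are illustrative assumptions to tune for your environment.

```python
from collections import Counter
from datetime import datetime


def hourly_row_volume(events):
    """Sum rows returned per (user, hour) bucket."""
    volume = Counter()
    for e in events:
        hour = e["timestamp"].replace(minute=0, second=0, microsecond=0)
        volume[(e["USER_ID"], hour)] += int(e.get("ROWS_PROCESSED", 0))
    return volume


def flag_bulk_extraction(events, max_rows_per_hour=50_000):
    """Return (user, hour, rows) tuples that exceed the hourly threshold."""
    return sorted(
        (user, hour, rows)
        for (user, hour), rows in hourly_row_volume(events).items()
        if rows > max_rows_per_hour
    )
```

A fixed threshold is the simplest possible baseline. In practice, a per-identity baseline learned from historical volume tends to produce fewer false positives for legitimately chatty integrations.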

Admin-Level Access in Some Configurations

Just as it sounds: in certain deployments, Gainsight users hold admin-level access, with excessive, broad, and powerful permissions that significantly amplify the impact of a compromise.

Lessons learned and recommended detections

The Mitiga incident response team’s involvement in recent cases lets us recommend the key tasks to follow. Criticality may vary, but the tasks are listed in chronological order, which means the first few should already be implemented across your organization!

Blocklist. IP addresses that were flagged in the previous campaign and the current one should be added to your blocklists wherever possible, especially at the infrastructure and identity layers. The attackers make heavy use of Tor exit nodes as well as VPN and proxy services commonly used to mask origin. Follow the Salesforce Indicators of Compromise list attributed to the Gainsight incident.
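IOC lists are usually published defanged (for example, 3[.]239[.]45[.]43), so they need to be "refanged" before they can be matched against logs or pushed to a blocklist. A minimal sketch follows, assuming events are dictionaries with an `SRC_IP` field as in the pseudocode later in this post. The single IOC shown is the one from the timeline above, and the helper names are our own.

```python
def refang(indicator: str) -> str:
    """Convert a defanged indicator such as 3[.]239[.]45[.]43 back to usable form."""
    return indicator.replace("[.]", ".").replace("hxxp", "http")


# The one IP address quoted in this post; extend with the published IOC list.
DEFANGED_IOCS = ["3[.]239[.]45[.]43"]
IOC_IPS = {refang(i) for i in DEFANGED_IOCS}


def match_iocs(events, ioc_ips=IOC_IPS):
    """Return events whose source IP exactly matches a known indicator."""
    return [e for e in events if e.get("SRC_IP") in ioc_ips]
```

The same refanged set can be exported to firewall, identity provider, or Salesforce login IP restrictions, so the blocklist and the retrospective hunt stay in sync.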

Patient zero is nice, but don’t forget one, two, and three. While revoking access for the root cause entity is great and, of course, highly valuable, don’t forget to do the same for the others. Forensically examine your environment, follow the user transactions, the interactions with other identities, session identifiers, and basically anything that could lead to the adversary laterally moving across your organization and even worse—escalating privileges.
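As a rough illustration of following the trail beyond patient zero, the sketch below pivots one hop out from known-bad IP addresses: it collects the users and sessions seen from those addresses, then pulls every other event that shares one of those sessions. The `USER_ID`, `SESSION_KEY`, and `SRC_IP` field names are assumptions about how your logs are normalized. A real investigation would pivot on more attributes and over several hops.

```python
def pivot_from_iocs(events, ioc_ips):
    """One-hop pivot: IOC IPs -> users and sessions -> related events."""
    seed = [e for e in events if e.get("SRC_IP") in ioc_ips]
    users = {e["USER_ID"] for e in seed if e.get("USER_ID")}
    sessions = {e["SESSION_KEY"] for e in seed if e.get("SESSION_KEY")}
    # Activity in the same sessions but from addresses not yet on the IOC list.
    related = [
        e
        for e in events
        if e.get("SESSION_KEY") in sessions and e.get("SRC_IP") not in ioc_ips
    ]
    return {"users": users, "sessions": sessions, "related_events": related}
```

Any new source addresses that surface in `related_events` are candidates for the next pivot and, once confirmed, for the blocklist.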

Remediation and Recovery. This is almost always forgotten. After fully remediating and making sure no blind spots are left, it’s time to recover with proactive and continuous monitoring. This is a key approach.

Package deployment. When vetting managed packages, delay non-critical updates by 2-3 weeks to allow community testing and security researcher analysis. This reduces your exposure in the next “battle.”

Package maintenance. Installing is nice, but “install and forget” is dead; it fails against supply chain threats. Continuously oversee these packages, and make sure your quarterly inventory reviews include even the less-thought-of ones.
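A quarterly review is easier to enforce when something tells you which packages are overdue. The following is a small sketch, assuming you keep your own inventory of installed packages with a `last_reviewed` date. The inventory structure and the 90-day interval are illustrative assumptions, not a Salesforce feature.

```python
from datetime import date, timedelta

# "Quarterly" approximated as 90 days; adjust to your review cadence.
REVIEW_INTERVAL = timedelta(days=90)


def packages_due_for_review(inventory, today):
    """Return names of packages never reviewed or reviewed too long ago."""
    due = []
    for pkg in inventory:
        last = pkg.get("last_reviewed")
        if last is None or today - last > REVIEW_INTERVAL:
            due.append(pkg["name"])
    return sorted(due)
```

Packages with no review date at all are treated as overdue on purpose: those are exactly the "less-thought-of" ones.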

Engineer your detections

Creating the detection is nice, but you need to constantly evolve your detections. Our approach at Mitiga is to continuously learn your environment, tuning each detection and determining whether it fits. You should do the same.

Key detections (Pseudocode) for Event Log Files in Salesforce to keep an eye out for:

Managed Package Installation

    # Extract relevant fields from JSON
    For each record in dataset:
        IS_SUCCESSFUL = extract_value(json, "IS_SUCCESSFUL")
        PACKAGE_NAME = extract_value(json, "PACKAGE_NAME")
        IS_MANAGED = extract_value(json, "IS_MANAGED")

        # Build event description based on conditions
        EVENT_DESCRIPTION = "New "
        If IS_MANAGED == "1":
            EVENT_DESCRIPTION += "Managed"
        Else:
            EVENT_DESCRIPTION += "Unmanaged"
        EVENT_DESCRIPTION += " Package " + PACKAGE_NAME

        # Set severity (always LOW)
        SEVERITY = "LOW"

        # Determine threat detection flag
        If IS_SUCCESSFUL == "1" AND IS_MANAGED == "1":
            IS_ALERT = True
        Else:
            IS_ALERT = False
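For readers who prefer something runnable, here is one possible Python rendering of the managed package pseudocode. It assumes each event arrives as a JSON string carrying the `IS_SUCCESSFUL`, `IS_MANAGED`, and `PACKAGE_NAME` fields named above. The function name and output keys are our own.

```python
import json


def detect_package_install(raw_record: str) -> dict:
    """Evaluate one package installation event given as a JSON string."""
    record = json.loads(raw_record)
    # Flags are compared as strings because the pseudocode tests against "1".
    is_successful = str(record.get("IS_SUCCESSFUL", "")) == "1"
    is_managed = str(record.get("IS_MANAGED", "")) == "1"
    package_name = record.get("PACKAGE_NAME") or ""

    kind = "Managed" if is_managed else "Unmanaged"
    return {
        "event_description": f"New {kind} Package {package_name}".strip(),
        "severity": "LOW",
        # Alert only on successful installations of managed packages.
        "is_alert": is_successful and is_managed,
    }
```

Failed installations and unmanaged packages still produce a described event. They just do not raise an alert, which keeps them available for hunting without adding noise.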

Impossible Traveler

    # Build the logic that applies to your environment. The general advice here is:
    For each identity, grab 90 days of data and analyze geographical location.
    If COMMON_LOCATION == TRUE:
        pass
    Else:
        # Identify the relevant events, successful logins only.
        EVENT_TYPE == 'Login' AND LOGIN_STATUS == 'LOGIN_NO_ERROR'

        # Compare logins that fall within a 24-hour time frame
        TIMEFRAME = 24H

        # Fetch properties based on the enrichment of IP addresses
        # 1st login
        OLD_LOCATION_LOGIN = col_ip_addr_location
        OLD_IP_ADDRESS = col_ip_addr
        OLD_TIMESTAMP = col_timestamp
        # 2nd login
        NEW_LOCATION_LOGIN = col_ip_addr_location
        NEW_IP_ADDRESS = col_ip_addr
        NEW_TIMESTAMP = col_timestamp

        # Calculate the distance between the two geographical locations using approximate pre-set data
        COL_DISTANCE_IN_KMS = distance(OLD_LOCATION_LOGIN, NEW_LOCATION_LOGIN)
        # Calculate the time difference between the old and new timestamps
        COL_TIME_DIFF_IN_HOURS = NEW_TIMESTAMP - OLD_TIMESTAMP

        # Decide whether the trip is possible at a rough travel speed of 800 km/h
        rough_travel_speed_per_hour = 800
        COL_IS_TRAVEL_POSSIBLE = when(
            (col(COL_DISTANCE_IN_KMS) / lit(rough_travel_speed_per_hour)) <= col(COL_TIME_DIFF_IN_HOURS),
            True,
        ).otherwise(False)

        # Raise an alert only when the travel is NOT possible
        If COL_IS_TRAVEL_POSSIBLE == False:
            Alert()
        Else:
            pass
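The core of the impossible traveler check can be sketched in plain Python. This version assumes each login has already been enriched with latitude and longitude and a `datetime` timestamp, uses the haversine great-circle formula for distance (our choice; the pseudocode only says "approximate pre-set data"), and keeps the 800 km/h speed and 24-hour window from above. The dictionary keys are hypothetical.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_SPEED_KMH = 800.0   # rough travel speed from the pseudocode
WINDOW_HOURS = 24.0     # only compare logins within this time frame


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def is_impossible_travel(old_login, new_login):
    """True when the two logins are too far apart for the elapsed time."""
    hours = (new_login["timestamp"] - old_login["timestamp"]).total_seconds() / 3600
    if hours < 0 or hours > WINDOW_HOURS:
        return False  # out of order or outside the comparison window
    distance = haversine_km(
        old_login["lat"], old_login["lon"], new_login["lat"], new_login["lon"]
    )
    # Travel is impossible when the required time exceeds the elapsed time.
    return distance / MAX_SPEED_KMH > hours
```

IP geolocation is approximate, and VPN or cloud egress points can move a legitimate user across continents in seconds. That is why the pseudocode first filters out each identity's common locations.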

Anomalous User Agent Observation

    # Use an internal engine for classifying user agent abnormality.
    # Flag automation/scripting/known IOC related user agents.
    # time_window and min_token_requests are tunable parameters.

    # STEP 1: Extract relevant fields from event logs
    For each record in dataset:
        user_id = extract_value(json, "USER_ID")
        user_name = extract_value(json, "USER_NAME")
        uri = extract_value(json, "URI")
        login_status = extract_value(json, "LOGIN_STATUS")
        user_agent = extract_value(json, "USER_AGENT")
        timestamp = extract_value(json, "TIMESTAMP")
        src_ip = extract_value(json, "SRC_IP")

    # STEP 2: Filter for successful OAuth token acquisition events
    oauth_events = filter records where:
        uri contains "/services/oauth2/token"
        AND login_status == "LOGIN_NO_ERROR"

    If oauth_events is empty:
        return empty dataset with columns:
            acquisition_pattern = ""
            token_count = 0
            user_agent_type = ""

    # STEP 3: Define suspicious user agent indicators (SLSH)
    SUSPICIOUS_USER_AGENTS = [
        "python-requests", "aiohttp", "curl", "wget", "httpclient",
        "okhttp", "PostmanRuntime", "Java/", "Go-http-client"
    ]

    # STEP 4: Classify user agent type
    For each record in oauth_events:
        If user_agent matches any pattern in SUSPICIOUS_USER_AGENTS:
            user_agent_type = "Automated Tooling"
        Else if user_agent is missing or empty:
            user_agent_type = "Missing User Agent"
        Else:
            user_agent_type = "Browser"

    # STEP 5: Filter suspicious events
    suspicious_events = filter oauth_events where user_agent_type in ["Automated Tooling", "Missing User Agent"]

    If suspicious_events is empty:
        return empty dataset with same columns as above

    # STEP 6: Aggregate by user and time window
    Group suspicious_events by user_id, user_name, time_window:
        token_count = count(records)
        all_user_agents = set of user_agent
        all_ips = set of src_ip
        first_timestamp = earliest timestamp

    Filter groups where token_count >= min_token_requests

    # STEP 7: Determine acquisition pattern
    For each group:
        If token_count >= min_token_requests * 3:
            acquisition_pattern = "Rapid Token Acquisition"
        Else if any user_agent in all_user_agents matches SUSPICIOUS_USER_AGENTS:
            acquisition_pattern = "Automated Tooling"
        Else if any record in the group has user_agent_type == "Missing User Agent":
            acquisition_pattern = "Missing User Agent"
        Else:
            acquisition_pattern = "Suspicious Token Acquisition"

    # STEP 8: Output enriched detection record
    Output fields:
        user_id, user_name, acquisition_pattern, token_count,
        all_user_agents, all_ips, first_timestamp
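A compact Python sketch of the user agent steps above follows. It is a simplified rendering, not Mitiga's internal engine: it assumes events are already parsed into dictionaries with the field names from Step 1, matches user agents case-insensitively, and treats the whole input as a single time window rather than bucketing by time. The `min_token_requests` default of 3 is an arbitrary placeholder.

```python
from collections import defaultdict

SUSPICIOUS_USER_AGENTS = [
    "python-requests", "aiohttp", "curl", "wget", "httpclient",
    "okhttp", "PostmanRuntime", "Java/", "Go-http-client",
]


def classify_user_agent(user_agent):
    """Classify a user agent string as in Step 4."""
    if not user_agent or not user_agent.strip():
        return "Missing User Agent"
    lowered = user_agent.lower()
    if any(p.lower() in lowered for p in SUSPICIOUS_USER_AGENTS):
        return "Automated Tooling"
    return "Browser"


def detect_token_acquisition(events, min_token_requests=3):
    """Steps 2-8, treating the input as one time window (a simplification)."""
    groups = defaultdict(list)
    for e in events:
        if "/services/oauth2/token" not in (e.get("URI") or ""):
            continue
        if e.get("LOGIN_STATUS") != "LOGIN_NO_ERROR":
            continue
        ua_type = classify_user_agent(e.get("USER_AGENT"))
        if ua_type != "Browser":
            groups[(e.get("USER_ID"), e.get("USER_NAME"))].append((e, ua_type))

    findings = []
    for (user_id, user_name), items in groups.items():
        token_count = len(items)
        if token_count < min_token_requests:
            continue
        if token_count >= min_token_requests * 3:
            pattern = "Rapid Token Acquisition"
        elif any(t == "Automated Tooling" for _, t in items):
            pattern = "Automated Tooling"
        else:
            pattern = "Missing User Agent"
        timestamps = [e["TIMESTAMP"] for e, _ in items if e.get("TIMESTAMP") is not None]
        findings.append({
            "user_id": user_id,
            "user_name": user_name,
            "acquisition_pattern": pattern,
            "token_count": token_count,
            "all_user_agents": sorted({e.get("USER_AGENT") or "" for e, _ in items}),
            "all_ips": sorted({e.get("SRC_IP") or "" for e, _ in items}),
            "first_timestamp": min(timestamps) if timestamps else None,
        })
    return findings
```

The user agent from the timeline, Python/3.11 aiohttp/3.13.1, is classified as "Automated Tooling" because it contains `aiohttp`. Since every event reaching the aggregation is already automated or missing a user agent, the pseudocode's final fallback pattern is not needed here.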

User agents in Salesforce require careful interpretation. We recommend using Salesforce Hacker to evaluate the true value hidden.

Preparing for this and the next supply chain attack

Salesforce, like other SaaS platforms, is increasingly targeted by many adversaries, both known and unknown.

We will soon know more about the impact SLSH had on Gainsight-linked instances and the extent of the activity. In the meantime, whether learning from this incident or planning for the next one, take responsibility for your environment. Implement a comprehensive incident response plan so that no checklist item is missed. Store and monitor your data over longer retention periods so you can investigate historical activity, and, most importantly, work hard on your detections. Build identity engines that can differentiate between common and uncommon behavior.

It’s not always easy to do that on your own, even with a full team in place. That’s why Mitiga’s here; our clients were covered, and we are ready to help strengthen your resilience as well.

LAST UPDATED:

December 3, 2025
