There’s a good reason many developers are excited about the cloud. The advent of managed services has enabled solutions architecture to become an assortment of building blocks—configuration is simple, scaling is precise, and development unfolds at an unprecedented pace. Despite the many benefits, cloud-based solutions can bring hidden dangers.

The biggest risk in cloud development is not recognizing the differences between cloud and traditional definitions of common architecture terms. For example, imagine a system that is completely “firewalled off”—a firewall prevents any inbound or outbound connections from the machine. Could an adversary build malware that could still communicate with that machine over the network? The answer is less clear than you’d imagine.

Strange behavior in Google Cloud’s control plane

In this section, we describe some odd and potentially dangerous behavior within Google Cloud Platform. We discussed this with the relevant team at GCP through Google’s Vulnerability Reward Program (VRP). They agreed with us that some documentation supporting these features could be clarified, but both Mitiga and Google agreed that the finding wasn’t a vulnerability. We at Mitiga believe that this functionality is potentially dangerous, and that misconfiguration is likely common enough to warrant concern; however, with proper access control to the GCP environment, there is no exploitable flaw.

To protect customers from the growing threat of cloud-savvy attackers, Mitiga works to identify new techniques an adversary might use as part of sophisticated cloud compromises. A few months ago, Mitiga began research on Google Cloud Platform’s (GCP) Compute virtual machine (VM) service. Similar to services such as Amazon Web Services (AWS) EC2 and Microsoft Azure VM, the service enables users to create, manage, secure, and monitor a variety of general-purpose virtual systems.

When reviewing the application programming interface (API) exposed by GCP for the Compute VM service, one API method in particular stood out: getSerialPortOutput(). This API call enables users to retrieve output from serial ports, a legacy method of debugging systems. Interestingly, serial ports are not “ports” in the TCP/IP sense; in Linux systems, serial ports are files of the form /dev/ttySX (where X is a single-digit integer, generally 0 through 3).
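
To make the port naming concrete, the sketch below maps API port numbers to Linux device files and builds the URL for the read call. It assumes the public Compute Engine v1 REST endpoint with its `port` and `start` query parameters, and it assumes that API port 1 corresponds to /dev/ttyS0; both should be checked against current GCP documentation. The project, zone, and instance names are hypothetical, and nothing is sent over the network.

```python
from urllib.parse import quote, urlencode

# Assumption: base URL of the Compute Engine v1 REST API.
COMPUTE_API = "https://compute.googleapis.com/compute/v1"


def serial_device_for_port(port: int) -> str:
    """Map an API serial port number (1-4) to a Linux device file.

    Assumes port 1 is the first serial port, /dev/ttyS0.
    """
    if port not in (1, 2, 3, 4):
        raise ValueError("serial port must be between 1 and 4")
    return f"/dev/ttyS{port - 1}"


def serial_output_url(project: str, zone: str, instance: str,
                      port: int = 1, start: int = 0) -> str:
    """Build the URL for an instances.getSerialPortOutput request."""
    serial_device_for_port(port)  # reuse the range check above
    parts = ("projects", project, "zones", zone,
             "instances", instance, "serialPort")
    path = "/".join(quote(part, safe="") for part in parts)
    query = urlencode({"port": port, "start": start})
    return f"{COMPUTE_API}/{path}?{query}"


if __name__ == "__main__":
    # Hypothetical resource names, for illustration only.
    print(serial_device_for_port(2))
    print(serial_output_url("example-project", "us-central1-a",
                            "example-vm", port=2))
```

The point of the sketch is that the read path is an ordinary authenticated HTTPS call to the control plane; it never touches the VM's own network interface.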

This API represented an interesting opportunity: the cloud control plane would allow us to read data from these serial ports, but from the VM’s perspective, writing to a serial port was a local action in that it did not require connectivity to a foreign system. What if we created a VM within GCP that was firewalled off from all inbound and outbound traffic but was configured to write data continuously to the serial ports? Would we still be able to read the data from the serial port?

What is internal traffic, anyway?

Interestingly, we noticed that such communication was possible. We were curious how this communication would be reflected within GCP’s network monitoring capabilities. Upon review, we saw that the traffic appeared to be classified as GOOGLE_INTERNAL, which doesn’t give the casual administrator much insight into what the nature of the traffic might be. Of course, if we look at the API calls made, we see the repeated calls to getSerialPortOutput — but even that could be missed by developers unfamiliar with the specifics of GCP’s Compute VM API.

By itself, this API represents not much more than a stealthy method of exfiltration. While intriguing, it would be much more powerful if we could identify a companion API method that would enable an adversary to send data to the machine. Combined, the methods would enable complete command and control (C2) over a machine with only cloud credentials.

One peculiarity of GCP that we noticed was that Compute VMs allow users with appropriate permissions to modify machine metadata at runtime using the setMetadata API method. While other cloud providers also let users assign metadata to a machine, they allowed modification only while the system was shut down. GCP VMs let users set custom metadata keys with custom values, and VMs are permitted by default to read these values from the internal metadata server. Paired with getSerialPortOutput, this gives us a full feedback loop on which to build C2 capabilities.
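
For readers unfamiliar with the metadata server, the following minimal sketch shows how any process on a VM can request a custom metadata value. It assumes the documented `metadata.google.internal` endpoint and the `Metadata-Flavor: Google` header; the key name `example-key` is hypothetical. `build_attribute_request` only constructs the request, and `read_attribute` works only from inside a Compute VM.

```python
import urllib.request
from urllib.parse import quote

# Assumption: documented endpoint for custom instance attributes.
METADATA_ATTRIBUTES = (
    "http://metadata.google.internal/computeMetadata/v1/instance/attributes/"
)


def build_attribute_request(key: str) -> urllib.request.Request:
    """Build (but do not send) a request for one custom metadata value."""
    if not key:
        raise ValueError("metadata key must not be empty")
    url = METADATA_ATTRIBUTES + quote(key, safe="")
    # The metadata server expects this header on every request.
    return urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})


def read_attribute(key: str, timeout: float = 2.0) -> str:
    """Fetch the value; only resolvable from inside a Compute VM."""
    request = build_attribute_request(key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    request = build_attribute_request("example-key")  # hypothetical key
    print(request.full_url)
```

Because the request needs no credentials beyond being on the VM, defenders should treat every custom metadata value as readable by any local process.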

Gaining GCP Command & Control

Our final question brought us back to the scenario of a fully firewalled-off virtual machine. How would we go about loading the malware onto the system in this case? Like many cloud VM providers, GCP enables users to use setMetadata to configure “user data” that is run whenever the VM starts. This field allows cloud operations or development staff to configure the VM and ensure necessary software is installed and configured. For our purposes, we can write a small script that will enable us to control the VM remotely using the two API methods. Since any script included in user data is run as root, we can further leverage this method to ensure our malware has full administrative access to the system.

The Google Cloud Platform Attack Scenarios

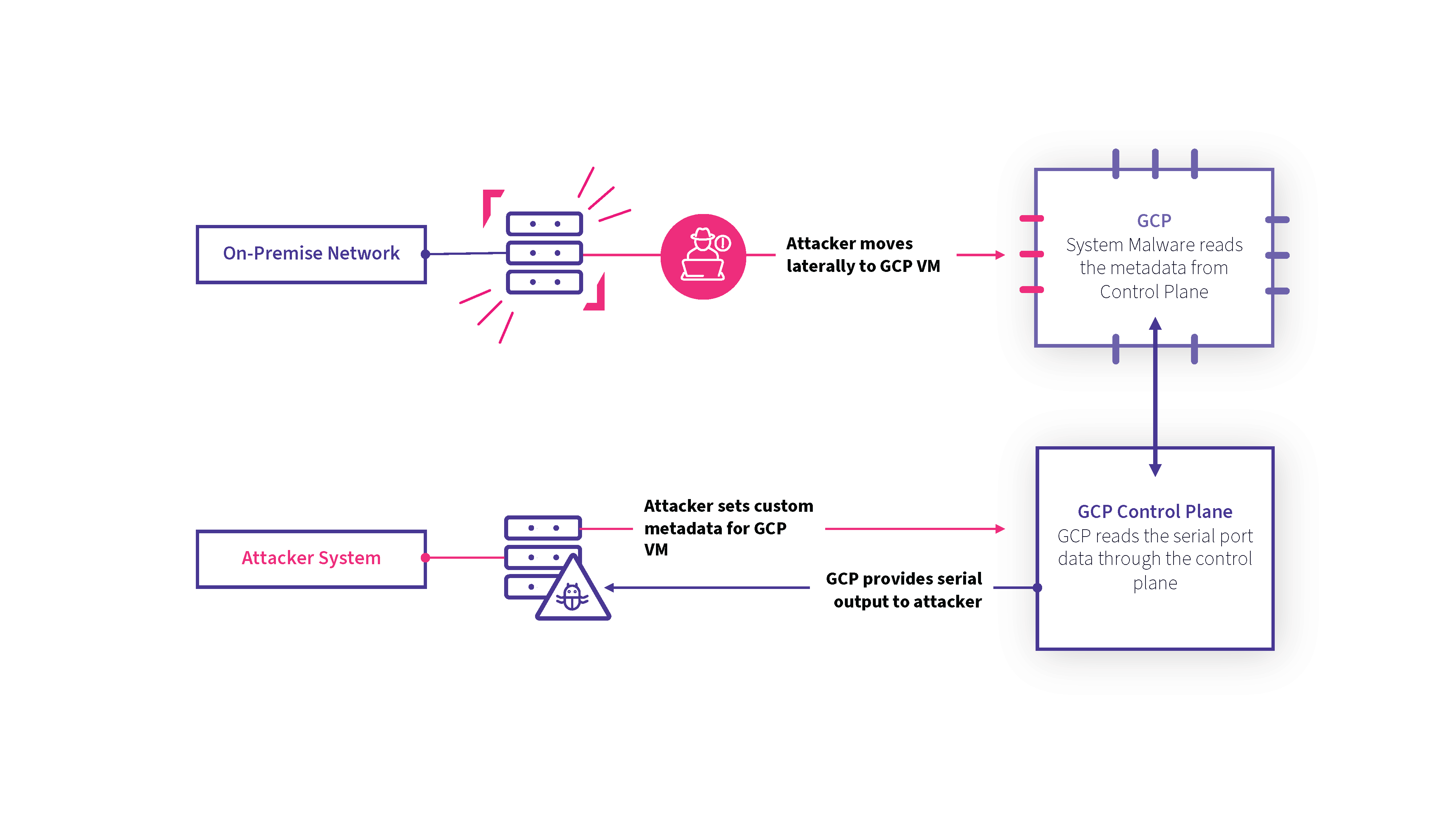

Consequently, we envisioned two potential attack scenarios:

1. An attacker gains access to Google Cloud Platform credentials with appropriate API permissions for both setMetadata and getSerialPortOutput on one or more VMs

2. Using traditional network-based methods of lateral movement, the attacker installs malware on the system that communicates using the GCP API

3. The attacker sends commands to the victim machine by inserting them into custom metadata using a predetermined key

4. The victim system continually reads the key looking for commands; when one is found, the command is executed, and the output is sent to a predetermined serial port

5. The adversary continually reads from the serial port and waits to receive the output of the command

Or alternatively:

1. An attacker gains access to GCP credentials with appropriate API permissions for setMetadata, getSerialPortOutput, and reset (that is, compute.instances.reset permissions) on one or more VMs

2. The adversary inserts the API-abusing malware into the victim’s system user data

3. The adversary “resets” the system, forcing a reboot. Upon reboot, the system will run user data as the administrative user

4. The attacker sends commands to the victim machine by inserting them into custom metadata using a predetermined key

5. The victim system continually reads the key looking for commands; when one is found, the command is executed, and the output is sent to a predetermined serial port

6. The adversary continually reads from the serial port and waits to receive the output of the command

Is it hard to apply these techniques?

The precondition for this technique is, of course, holding the relevant permissions on the targeted systems, which implies control of one or more sets of GCP credentials. Likewise, the setMetadata method (arguably the more powerful of the two, given that it enables a user to run commands on the targeted system) is only exposed in the Compute Instance Admin role, although that does not stop administrators from assigning the permission itself to a user. Hopefully, the fact that this permission is unavailable to users without administrative access signals to developers and administrators that it is quite dangerous.

Unfortunately, getSerialPortOutput is available even to low-permission “viewer” roles. This is concerning given that getSerialPortOutput enables exfiltration regardless of how firewalls are configured. An adversary could potentially use this method to stealthily exfiltrate data from a system that the adversary gained access to via a traditional method. Similarly, it is our understanding that a malicious administrator of GCP systems could exfiltrate their own data from Google Cloud using the getSerialPortOutput method without having to pay for outbound data transmission charges, potentially avoiding a hefty bill if the administrator is willing to cope with an admittedly slow data throughput (a bit less than 1 MBps from our testing). This free data transmission is enabled by the fact that getSerialPortOutput traffic is listed as GOOGLE_INTERNAL traffic and not EXTERNAL, the typical label assigned to traffic that would be billed back to the user. Mitiga has relayed these concerns to Google but has not received specific acknowledgement as to whether traffic sent through getSerialPortOutput is billed to the user or whether Google plans to add safeguards to this method.
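
One way to act on this is to audit which roles in an environment carry the permissions discussed here. The sketch below is a minimal, offline check over a mapping of role names to permission lists; the role names and their contents are hypothetical sample data, and in practice the mapping would come from an export of your own IAM roles. The permission strings are our assumption of the relevant IAM permission names.

```python
# Assumption: IAM permission names for the API methods discussed above.
RISKY_PERMISSIONS = {
    "compute.instances.setMetadata",
    "compute.projects.setCommonInstanceMetadata",
    "compute.instances.getSerialPortOutput",
}


def risky_grants(roles: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return, for each role, the sorted risky permissions it contains.

    Roles with no risky permissions are omitted from the result.
    """
    findings = {}
    for role, permissions in roles.items():
        hits = sorted(RISKY_PERMISSIONS.intersection(permissions))
        if hits:
            findings[role] = hits
    return findings


if __name__ == "__main__":
    # Hypothetical custom roles, for illustration only.
    sample_roles = {
        "customViewer": ["compute.instances.get",
                         "compute.instances.getSerialPortOutput"],
        "customOperator": ["compute.instances.start",
                           "compute.instances.stop"],
    }
    for role, hits in risky_grants(sample_roles).items():
        print(role, hits)
```

Any role that appears in the result deserves a review of who holds it and whether the permission is actually needed.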

Responsible disclosure and discussion

After discovering the dangers of these API methods, we contacted Google’s Vulnerability Reward Program (VRP) to inform them of our findings. We recommended the following:

· Validate that getSerialPortOutput couldn’t be used to bypass Google’s metering for external network communication

· Restrict getSerialPortOutput to only higher-tiered roles and/or enable users to disable the API for a specific instance or project-wide

· Enable users to disable the addition or alteration of Compute VM metadata at runtime, either for a specific instance or project-wide

· Alter documentation to make it clearer that firewalls and other network controls do not entirely restrict access to VMs, and that GCP credentials with appropriate permissions enable command and control of systems without access to the subnet in which the VM resides

After a long exchange, Google did ultimately concur that certain portions of their documentation could be made clearer and agreed to make changes to documentation that indicated the control plane can access VMs regardless of firewall settings. Google did not acknowledge the other recommendations nor speak to specifics regarding whether a GCP user could evade charges by using the getSerialPortOutput method. Google rewarded the author $100 for the findings associated with the VRP notification.

Google did indicate that the VPC Service Controls (VPC-SC) feature could be used to restrict access to sensitive APIs, such as those exposed by the Compute service. Based on information provided by Google, Mitiga recommends all customers enable VPC-SC where possible to prevent unauthorized access.

It is further worth noting that Mitiga’s findings do not represent a “vulnerability” per se, but rather a potentially problematic but valid permissions model within GCP. Moreover, the functionality described is not necessarily exclusive to GCP, and analogous techniques can likely be performed in other cloud control planes, such as Microsoft Azure or Amazon Web Services.

How to harden your GCP environment

For those with GCP environments concerned about mitigating the risks related to the attack scenarios identified above, take the following hardening and hunting actions to minimize risk:

Hardening:

- Don’t use built-in roles. Instead, assign specific permissions as needed to users in adherence with the principle of least privilege.

- Evaluate whether dangerous functionality such as setMetadata (and similar APIs such as setCommonInstanceMetadata) is necessary. If not, ensure that cloud credentials are configured to lack such permissions.

- Ensure systems only allow remote access via approved remote administration methods, such as SSH or RDP.

- Where possible, run applications or services using low-privileged users. Where possible, harden systems such that service accounts cannot write to serial ports.

- If you do not use getSerialPortOutput, consider adding an IAM deny policy for Compute users that blocks the compute.instances.getSerialPortOutput permission.
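
As a rough illustration of the last recommendation, the sketch below assembles a deny policy body as JSON. The field names (`rules`, `denyRule`, `deniedPrincipals`, `deniedPermissions`, `exceptionPrincipals`), the principal identifiers, and the permission string format are our understanding of the IAM deny policy format and should be verified against current Google documentation before use; the exception group is hypothetical.

```python
import json


def serial_port_deny_policy(exception_principals=()):
    """Build a deny policy body that blocks getSerialPortOutput.

    Assumption: deny policies name permissions as
    "<service FQDN>/<resource>.<action>" and accept
    "principalSet://goog/public:all" to mean all principals.
    """
    rule = {
        "deniedPrincipals": ["principalSet://goog/public:all"],
        "deniedPermissions": [
            "compute.googleapis.com/instances.getSerialPortOutput"
        ],
    }
    if exception_principals:
        # Principals listed here remain able to use the permission.
        rule["exceptionPrincipals"] = list(exception_principals)
    return {
        "displayName": "Deny serial port output reads",
        "rules": [{"denyRule": rule}],
    }


if __name__ == "__main__":
    # Hypothetical break-glass group that keeps access.
    policy = serial_port_deny_policy(
        ["principalSet://goog/group/break-glass@example.com"]
    )
    print(json.dumps(policy, indent=2))
```

Generating the policy from code keeps the exception list reviewable in version control, which matters because every exception reopens the exfiltration path described above.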

Hunting:

- Look for repeated invocations of getSerialPortOutput or setMetadata if not commonly used in the environment.

- Depending on the frequency of usage, consider performing threat hunting for invocations of such API calls.

- Review the IP addresses of clients making such API calls and ensure they originate from approved locations (for example, known employee home office IPs, organization-operated external IPs, and so on).

- Look for and investigate anomalous spikes in traffic, even if the traffic is labeled as GOOGLE_INTERNAL or similar benign-sounding labels.
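
The first and third hunting steps can be sketched as a small offline pass over exported audit log entries. The sketch assumes entries are dictionaries shaped like Cloud Audit Logs records (`protoPayload.methodName`, `authenticationInfo.principalEmail`, `requestMetadata.callerIp`) and that method names end with the API method names used in this post; the threshold, networks, and sample data are hypothetical. Note that read calls such as getSerialPortOutput may only be logged if the relevant audit logging is enabled.

```python
import ipaddress
from collections import Counter

# Assumption: audit log method names end with these strings.
WATCHED_SUFFIXES = (
    "compute.instances.getSerialPortOutput",
    "compute.instances.setMetadata",
)


def hunt(entries, approved_networks, threshold=10):
    """Return (noisy, unapproved) from audit log entries.

    noisy: {(principal, method): count} for counts >= threshold.
    unapproved: caller IP strings outside the approved networks.
    """
    networks = [ipaddress.ip_network(net) for net in approved_networks]
    counts = Counter()
    unapproved = set()
    for entry in entries:
        payload = entry.get("protoPayload", {})
        method = payload.get("methodName", "")
        if not method.endswith(WATCHED_SUFFIXES):
            continue
        auth = payload.get("authenticationInfo", {})
        counts[(auth.get("principalEmail", "unknown"), method)] += 1
        caller_ip = payload.get("requestMetadata", {}).get("callerIp")
        if not caller_ip:
            continue
        try:
            address = ipaddress.ip_address(caller_ip)
        except ValueError:
            # Values that are not IP addresses are flagged for review.
            unapproved.add(caller_ip)
            continue
        if not any(address in network for network in networks):
            unapproved.add(caller_ip)
    noisy = {key: n for key, n in counts.items() if n >= threshold}
    return noisy, unapproved


if __name__ == "__main__":
    # Hypothetical log entries using documentation IP ranges.
    sample = [{"protoPayload": {
        "methodName": "v1.compute.instances.getSerialPortOutput",
        "authenticationInfo": {"principalEmail": "svc@example.com"},
        "requestMetadata": {"callerIp": "203.0.113.7"},
    }}] * 12
    print(hunt(sample, ["198.51.100.0/24"]))
```

A fixed threshold is a crude baseline; environments that legitimately poll serial output will need a per-principal baseline instead.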

Understanding the cloud control plane

Part of the challenge of transitioning to cloud computing is recognizing that common terms are “overloaded.” While cloud providers may use terms like “network” and “firewall,” they are not referring to perfectly analogous concepts. For the most part, such terms mean and act like their traditional or on-premises counterparts, but there are often dangerous exceptions. One such exception is the omnipresence of the cloud control plane. When a traditional system is “firewalled off” from inbound and outbound communication, we can say with a decent level of accuracy (Mission: Impossible-style side-channel attacks involving power fluctuations or blinking hard disk lights aside) that such a system cannot be compromised by a remote adversary. With cloud systems, such assertions are plainly false: cloud credentials with reasonable permissions are often enough on their own to gain full control of a system, regardless of how network defenses are configured.

To cloud providers, this isn’t an issue: under the shared responsibility model that underlies cloud security, understanding the ramifications of the cloud control plane’s functionality is the user’s responsibility, as is ensuring that credentials have the minimal appropriate permissions and are secured from potential adversaries. In reality, this is expecting too much from most cloud users. Operations and solutions architecture personnel who are more familiar with traditional systems may believe that simply translating prior system designs to the cloud grants equal or even greater levels of security, but that may not be true. In cloud systems, credentials are often just as good as access.

Update

This post was updated May 5 to clarify that Google did not consider the finding dangerous. We also added information on adding an IAM deny policy for Compute users to our hardening recommendations at Google's suggestion.

LAST UPDATED:

November 14, 2025