This past week at re:Invent, AWS announced a very cool new product feature: EKS Pod Identity. As an AWS user, and specifically an EKS (Elastic Kubernetes Service) user, I spend a great deal of time connecting my pods and workloads to other AWS services and clusters in other regions and accounts, so for me, this feature arrives just in time.

Back in 2019, AWS released the IAM roles for service accounts (IRSA) feature, which lets users connect an AWS identity, such as an IAM role, to a K8S identity called a service account. The cluster is assigned an OIDC (OpenID Connect) identity provider. Using this feature, you map a role to a K8S service account by altering the IAM role’s trust policy so that it allows the sts:AssumeRoleWithWebIdentity action for that specific service account, which is in turn attached to a chosen pod. The pod then receives the permissions of the policies attached to the IAM role. An example of the role’s trust policy is described below.
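A minimal sketch of what such an IRSA trust policy looks like, built as a Python dictionary so it can be printed as JSON. The account ID, OIDC provider path, namespace, and service account name are placeholders I made up for illustration, not values from my setup.

```python
import json


def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Build an IRSA-style trust policy for a single service account.

    All four arguments are illustrative placeholders.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The cluster's OIDC provider is the federated principal.
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                # Restrict the role to one namespace/service account pair.
                "Condition": {
                    "StringEquals": {
                        f"{oidc_provider}:aud": "sts.amazonaws.com",
                        f"{oidc_provider}:sub": (
                            f"system:serviceaccount:{namespace}:{service_account}"
                        ),
                    }
                },
            }
        ],
    }


irsa_policy = irsa_trust_policy(
    "111122223333",                                 # hypothetical account ID
    "oidc.eks.eu-west-1.amazonaws.com/id/EXAMPLE",  # hypothetical OIDC provider
    "default",
    "my-service-account",
)
print(json.dumps(irsa_policy, indent=2))
```

Note that the OIDC provider path appears in both the principal and the condition keys, which is why every new cluster or service account means another edit to this document.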

While this method provides a sensible solution for tackling the challenge of furnishing fine-grained IAM credentials to EKS pods, it comes with drawbacks, particularly in the realms of automation and complexity. To obtain credentials for each desired service account, a section in the trust policy must be manually added to the role. Automating the generation of this connection can prove challenging.

Thankfully, at this year’s re:Invent the EKS team released a new EKS Pod Identity feature that should make our lives a little bit easier. I was very excited to give it a try. Below, I’ve detailed what I did and what I learned along the way.

How challenging is the new EKS Pod Identity feature, in reality?

Implementing the EKS Pod Identity feature may appear challenging at first glance, but with a systematic approach and a solid grasp of its core principles, it can strengthen security and simplify identity management in Kubernetes clusters.

Cluster creation and node group

I created a new cluster and as part of its creation added the Amazon EKS Pod Identity Agent add-on.

I wanted my new pod to be able to place files in a designated bucket and folder. The bucket is located in a different AWS account. I created a node group in the newly created cluster as well.

IAM role creation

The trust policy should include the following statement, which allows the pods.eks.amazonaws.com service to assume this role.
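As a sketch, the trust statement for EKS Pod Identity can be written like this (shown as a Python dictionary and printed as JSON). The Sid is an arbitrary label; the service principal and the two actions are what the feature relies on.

```python
import json

# Trust policy that lets the EKS Pod Identity service assume the role.
# sts:TagSession is needed because the service attaches session tags
# (cluster, namespace, service account, ...) when it assumes the role.
pod_identity_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowEksPodIdentity",  # arbitrary label
            "Effect": "Allow",
            "Principal": {"Service": "pods.eks.amazonaws.com"},
            "Action": ["sts:AssumeRole", "sts:TagSession"],
        }
    ],
}

print(json.dumps(pod_identity_trust_policy, indent=2))
```

Unlike the IRSA version, nothing here names a cluster or an OIDC provider, so the same role can be reused without touching the trust policy.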

I attached a policy allowing the s3:PutObject action on the target bucket and folder:
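A minimal sketch of such a permissions policy, again as a Python dictionary. The bucket name and folder prefix are hypothetical placeholders, not the ones I used.

```python
import json


def put_object_policy(bucket, prefix):
    """Identity policy allowing uploads only under one folder of one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:PutObject",
                # Object ARNs under the folder; bucket and prefix are placeholders.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


role_policy = put_object_policy("example-target-bucket", "uploads")
print(json.dumps(role_policy, indent=2))
```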

After the IAM role was set I moved on to create the EKS resources.

EKS resources

I created a basic pod and a service account for it and applied both to the K8S cluster. Note that I did not need to add the eks.amazonaws.com/role-arn annotation to the service account:

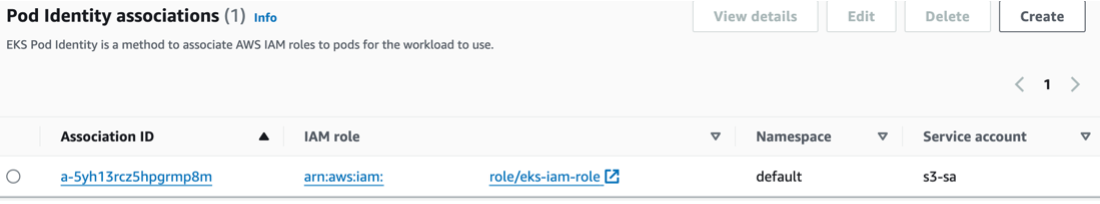

After creating these elements, I moved forward and created the association:

Our cluster has a new Access tab, which allows us to create associations between IAM roles and pods.

I created a new association and inserted the role name and the service account name of the resources I created in earlier steps:
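I used the console, but the same association can be scripted. The sketch below assumes boto3 and its EKS `create_pod_identity_association` call; the function name `associate_role` and all argument values are my own placeholders. The client is passed in so the function itself does not depend on how credentials are configured.

```python
def associate_role(eks_client, cluster, namespace, service_account, role_arn):
    """Create a Pod Identity association and return its ID.

    eks_client is expected to be a boto3 EKS client, e.g. boto3.client("eks").
    """
    response = eks_client.create_pod_identity_association(
        clusterName=cluster,
        namespace=namespace,
        serviceAccount=service_account,
        roleArn=role_arn,
    )
    return response["association"]["associationId"]


# Example usage (requires boto3 and AWS credentials; values are hypothetical):
#
#   import boto3
#   association_id = associate_role(
#       boto3.client("eks"),
#       cluster="my-cluster",
#       namespace="default",
#       service_account="my-service-account",
#       role_arn="arn:aws:iam::111122223333:role/my-pod-role",
#   )
```

Note that the API takes the role ARN rather than the role name shown in the console form.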

That’s it! We’re connected!

Internal credentials magic

Logging into the pod, I can see two new environment variables that were not there before:

- AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE which provides the credentials token file path.

- AWS_CONTAINER_CREDENTIALS_FULL_URI which supplies the URI of the API for querying to obtain the necessary credentials.

These two variables are injected into the pod when it is created with a service account that has an association.
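To make the mechanism concrete, here is a rough sketch of what an SDK's container credentials provider does with these two variables: read the token from the file and send it in the Authorization header of a GET request to the URI. The function names are mine, and the response field names in the comment reflect the usual container credentials format, which I am assuming applies here.

```python
import json
import os
import urllib.request


def build_credentials_request(env=os.environ):
    """Build the HTTP request an SDK would send to the credentials endpoint."""
    token_path = env["AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE"]
    uri = env["AWS_CONTAINER_CREDENTIALS_FULL_URI"]
    # The projected service account token is read fresh from the file.
    with open(token_path) as token_file:
        token = token_file.read().strip()
    return urllib.request.Request(uri, headers={"Authorization": token})


def fetch_credentials(env=os.environ):
    """Call the endpoint; only works from inside an associated pod.

    Assumed response shape: JSON with AccessKeyId, SecretAccessKey,
    Token and Expiration fields.
    """
    request = build_credentials_request(env)
    with urllib.request.urlopen(request, timeout=2) as response:
        return json.load(response)
```

In practice you would never call this yourself; a recent enough AWS SDK picks the variables up through its default credential chain.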

Bucket

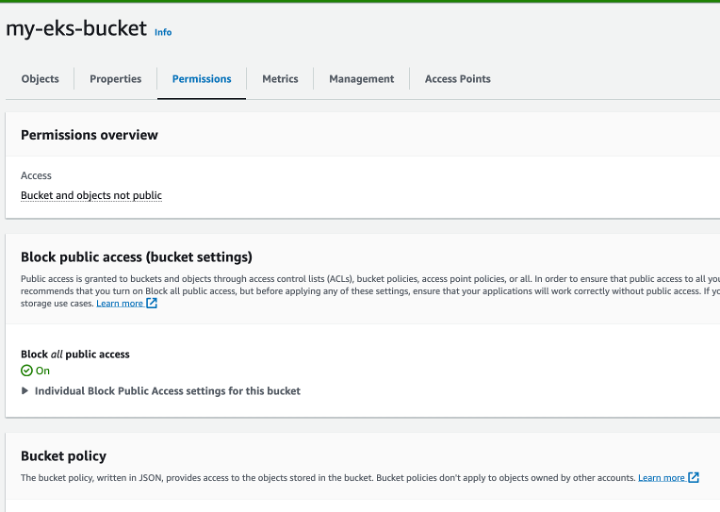

Before we test our association and try to put objects in the bucket using our pod’s new credentials, there is one last thing to add. Because we used cross-account permissions (the bucket is in a different account), we now need to add a statement to the bucket policy that allows our role to put objects in the relevant folder.

I opened the bucket’s Permissions tab and edited the bucket policy with the policy presented:
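A sketch of a bucket policy statement of this kind follows. It mirrors the role's permissions policy but adds a Principal, since a bucket policy must say who is allowed. The role ARN, bucket name, and folder are hypothetical placeholders.

```python
import json


def cross_account_bucket_policy(role_arn, bucket, prefix):
    """Bucket policy letting a role from another account upload to one folder."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowPodRolePutObject",  # arbitrary label
                "Effect": "Allow",
                # The IAM role that lives in the cluster's account.
                "Principal": {"AWS": role_arn},
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


bucket_policy = cross_account_bucket_policy(
    "arn:aws:iam::111122223333:role/my-pod-role",  # hypothetical role ARN
    "example-target-bucket",
    "uploads",
)
print(json.dumps(bucket_policy, indent=2))
```

Both sides have to allow the action: the role's own policy in the cluster's account and this bucket policy in the bucket's account.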

After completing this step, we can test our association. As of now, the AWS CLI does not support authenticating through EKS Pod Identity, but other AWS SDKs do support it.
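One way to run the test from inside the pod is a small SDK script. The sketch below assumes boto3 (a version recent enough to understand the Pod Identity environment variables) and uses placeholder bucket and key names; `upload_test_object` is my own helper name. The S3 client is passed in as an argument.

```python
def upload_test_object(s3_client, bucket, key, body=b"hello from the pod"):
    """Upload a small object and return the HTTP status code of the call.

    s3_client is expected to be a boto3 S3 client created inside the pod,
    so that its credentials come from EKS Pod Identity.
    """
    response = s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return response["ResponseMetadata"]["HTTPStatusCode"]


# Example usage inside the pod (bucket and key are hypothetical):
#
#   import boto3
#   status = upload_test_object(
#       boto3.client("s3"), "example-target-bucket", "uploads/test.txt"
#   )
#   print(status)
```

An access-denied error at this point usually means one of the two policies (the role's or the bucket's) does not cover the key you are writing to.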

What do we EKS admins get out of this new AWS feature?

- Simplified Automated IAM Roles. These IAM associations can be automated and created through the CLI, and you won’t have to edit the trust policy manually every time you create a new cluster or identity that needs to assume the role. In general, giving pods IAM roles rather than plain IAM user credentials is the more secure, least-privilege way of providing credentials to pods.

- Trust Relations Pod Limitations. You can add a Condition to the trust policy to restrict which pods can assume the role.

- Process on AWS, EKS-free. The entire process is applied through AWS without having to change any EKS resources, such as adding the eks.amazonaws.com/role-arn annotation to the service account.
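As an illustration of the trust policy Condition mentioned in the list above, here is a hedged sketch. It assumes that EKS Pod Identity attaches session tags such as kubernetes-namespace and kubernetes-service-account when assuming the role, and that they can be matched with aws:RequestTag condition keys. Treat the exact keys as an assumption to verify against the AWS documentation; the namespace and service account values are placeholders.

```python
import json


def scoped_pod_identity_trust_policy(namespace, service_account):
    """Trust policy limited to pods of one namespace/service account pair.

    The aws:RequestTag/... keys are assumed to match the session tags
    that EKS Pod Identity sets; verify them before relying on this.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "pods.eks.amazonaws.com"},
                "Action": ["sts:AssumeRole", "sts:TagSession"],
                "Condition": {
                    "StringEquals": {
                        "aws:RequestTag/kubernetes-namespace": namespace,
                        "aws:RequestTag/kubernetes-service-account": service_account,
                    }
                },
            }
        ],
    }


scoped_policy = scoped_pod_identity_trust_policy("default", "my-service-account")
print(json.dumps(scoped_policy, indent=2))
```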

With EKS Pod Identity, AWS has introduced a new feature that truly enhances a user’s comprehension of K8S credentials, making them easier to manage. The feature is incredibly user-friendly, and I encourage all EKS admins to begin utilizing it.